Chinese word segmentation is a fundamental part of Chinese natural language processing. It has a long history of research in both academia and industry, and mature solutions exist. Today we have invited two distinguished guests, Dr. Xu and Jason, to review the development of Chinese word segmentation with everyone, focusing on the more popular methods based on machine learning.

Guest Profile

Dr. Xu holds a Ph.D. from the Language and Speech Laboratory at Johns Hopkins University. After graduating in 2012, he joined Microsoft headquarters, where he has worked on natural language processing and machine learning research and product development at Bing and Microsoft Research. He is a member of the Cortana semantic understanding and dialogue system team and of the Word Flow input method team.

Jason graduated from the Natural Language Processing Group at the University of Cambridge and has worked in natural language processing research and development ever since. He joined Mobvoi (Chumenwenwen) more than three years ago and is responsible for developing its semantic analysis and dialogue systems. The products he has worked on include the mobile app, the voice interaction system of the Ticwear smartwatch, and the magical small smart customer service dialogue system.

Part 1 ▎ What is word segmentation, and why do we need it?

First, what is a word? "A word is the smallest linguistic unit that can be used independently." And what does "used independently" mean? It can be understood as "serving as a syntactic constituent, or performing a grammatical function, on its own." Linguistics offers many studies that give definitions of words and criteria for identifying them. Many Chinese words consist of a single character, but far more individual characters cannot "serve as a syntactic constituent or perform a grammatical function on their own." Because the NLP algorithms in common use internationally generally take the word as the basic unit for deeper syntactic and semantic analysis, many Chinese NLP tasks include a preprocessing step that splits a continuous run of Chinese characters into linguistically and semantically more meaningful words. This step is called word segmentation.

Here is an example from (Xue 2003):

The sentence 日文章鱼怎么说 can be segmented as 日文 / 章鱼 / 怎么 / 说 ("How do you say 'octopus' in Japanese?") or as 日 / 文章 / 鱼 / 怎么 / 说 ("day / article / fish / how / say").

I would like to emphasize that although linguistics offers a relatively clear definition of a word, when it comes to processing natural language by computer there is often no universally accepted segmentation standard. Because word segmentation is mostly a preprocessing step, its quality usually has to be judged together with the downstream application.

For example, in speech recognition, building a language model usually requires word segmentation. From the perspective of recognition accuracy, longer words tend to give better results (the acoustic model discriminates between them more easily). In text mining, however, shorter words are often more effective, especially in terms of recall (Peng et al. 2002, Gao et al. 2005). In phrase-based machine translation, studies have also found that shorter words (shorter than the Chinese Treebank standard) lead to better translation results (Chang et al. 2008). Therefore, how to measure segmentation quality and which segmentation method to choose should be decided in light of the application at hand.

▎ What are the common traditional word segmentation methods?

Dictionary matching is the most traditional and most common word segmentation method. Even in the statistical learning approaches discussed later, dictionary matching is often a very important source of information (in the form of feature functions). Matching can run forward (left to right) or backward (right to left). When matching runs into a segmentation ambiguity, the segmentation with the fewest words is usually chosen.

Obviously, this method relies heavily on the dictionary: once a new word that is not in the dictionary appears, the algorithm cannot segment it correctly. Dictionary matching also has its advantages, however: it is easy to understand, it needs no training data, it can be tightly coupled with downstream applications (phrase tables in machine translation, lexicons in TTS, and so on), and its errors are easy to correct.
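
As a minimal sketch of dictionary matching, the following Python code runs greedy longest-match segmentation forward (left to right) and backward (right to left) and keeps the result with fewer words. The toy dictionary, the `max_len` limit, and the tie-break on the number of single-character words are assumptions made for illustration; they are not taken from any particular system.

```python
def forward_max_match(text, vocab, max_len=4):
    """Greedy left-to-right longest match; unknown characters become one-character words."""
    words, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                words.append(piece)
                i += size
                break
    return words


def backward_max_match(text, vocab, max_len=4):
    """Greedy right-to-left longest match."""
    words, j = [], len(text)
    while j > 0:
        for size in range(min(max_len, j), 0, -1):
            piece = text[j - size:j]
            if size == 1 or piece in vocab:
                words.append(piece)
                j -= size
                break
    words.reverse()
    return words


def segment(text, vocab, max_len=4):
    """Prefer fewer words; on a tie prefer fewer single-character words (assumed tie-break)."""
    fwd = forward_max_match(text, vocab, max_len)
    bwd = backward_max_match(text, vocab, max_len)

    def cost(words):
        return (len(words), sum(1 for w in words if len(w) == 1))

    return fwd if cost(fwd) < cost(bwd) else bwd


# Toy dictionary, for illustration only.
vocab = {"研究", "研究生", "生命", "的", "起源"}
print(forward_max_match("研究生命的起源", vocab))   # forward matching grabs 研究生 first
print(backward_max_match("研究生命的起源", vocab))
print(segment("研究生命的起源", vocab))
```

Here the forward pass commits to 研究生 and leaves 命 stranded as an unknown single character, while the backward pass recovers 研究 / 生命. Both results contain four words, so the assumed tie-break decides in favor of the backward result.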

Another class of methods uses statistics computed from corpus data (such as mutual information) to estimate how strongly adjacent Chinese characters are associated, and segments on that basis. These methods do not depend on a dictionary and are very flexible, especially for discovering new words, but their accuracy is often a problem.
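
The idea can be sketched with pointwise mutual information (PMI) between adjacent characters: characters that co-occur much more often than chance are glued together, and a boundary is placed wherever the association is weak. The six-sentence corpus and the threshold of 2.2 below are made up for illustration; a real system would estimate the statistics from a large corpus and tune the threshold.

```python
import math
from collections import Counter


def build_pmi(corpus):
    """Return a function pmi(a, b) estimated from raw, unsegmented sentences."""
    chars, pairs = Counter(), Counter()
    for sent in corpus:
        chars.update(sent)
        pairs.update(zip(sent, sent[1:]))
    n_chars, n_pairs = sum(chars.values()), sum(pairs.values())

    def pmi(a, b):
        if pairs[(a, b)] == 0:
            return float("-inf")  # never seen together: always a boundary
        p_ab = pairs[(a, b)] / n_pairs
        return math.log(p_ab / ((chars[a] / n_chars) * (chars[b] / n_chars)))

    return pmi


def segment_by_pmi(text, pmi, threshold):
    """Join adjacent characters whose PMI reaches the threshold, split otherwise."""
    if not text:
        return []
    words = [text[0]]
    for a, b in zip(text, text[1:]):
        if pmi(a, b) >= threshold:
            words[-1] += b
        else:
            words.append(b)
    return words


# Tiny toy corpus, for illustration only.
corpus = ["我爱北京", "我在北京", "北京很大", "他爱上海", "他在上海", "上海很大"]
pmi = build_pmi(corpus)
print(segment_by_pmi("我爱北京", pmi, threshold=2.2))
print(segment_by_pmi("北京很大", pmi, threshold=2.2))
```

No dictionary is involved, which is why this kind of method can pick up new words, but the result is sensitive to the corpus and the threshold, which matches the accuracy concern raised above.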

Many systems therefore combine dictionary-based and dictionary-free methods, taking advantage of the accuracy of the dictionary method and its ease of integration with downstream applications, while exploiting the recall of the dictionary-free method.

What are the applications of machine learning in word segmentation?

Indeed, machine learning has developed very rapidly over the last ten years or so, with new models and algorithms emerging one after another. Because of its importance and its clear problem definition, Chinese word segmentation has become a testing ground for many new studies. There is a great deal of work in this area, so I will try to divide the main models and methods into two categories:

One is based on character tagging, that is, labeling each character separately.

The other is word-based, that is, annotating and modeling words as whole units.

The core of the character-based method is to label the position of each character within the word it belongs to. Any character can be the beginning of a word (Beginning), the inside of a word (Inside), the end of a word (Ending), or a word by itself (Singleton); this is the BIES scheme commonly used in sequence labeling. This way of dividing the label space (the model's state space) is also very common in other tasks such as NER, and there are similar variants, such as the BIO scheme commonly used in NER.
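
To make the BIES scheme concrete, here is a small sketch that converts a segmented sentence into one tag per character and back. The function names are hypothetical helpers written for this illustration.

```python
def words_to_bies(words):
    """Map each word to per-character position tags: B, I, E, or S."""
    tags = []
    for word in words:
        if len(word) == 1:
            tags.append("S")
        else:
            tags.extend(["B"] + ["I"] * (len(word) - 2) + ["E"])
    return tags


def bies_to_words(chars, tags):
    """Rebuild words from characters and their tags; a word closes on E or S."""
    words, current = [], ""
    for ch, tag in zip(chars, tags):
        current += ch
        if tag in ("E", "S"):
            words.append(current)
            current = ""
    if current:  # tolerate a tag sequence that ends mid-word
        words.append(current)
    return words


words = ["日文", "章鱼", "怎么", "说"]
tags = words_to_bies(words)
print(tags)
print(bies_to_words("".join(words), tags))
```

With this encoding, segmentation becomes a per-character classification problem: a tagger only has to predict one of four labels at each position.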

Speaking of this kind of method, two models have to be mentioned: the Maximum Entropy Markov Model (MEMM) and the Conditional Random Field (CRF). Both are discriminative models. Compared with generative models (such as naive Bayes and HMMs), they allow a great deal of flexibility in defining feature functions.

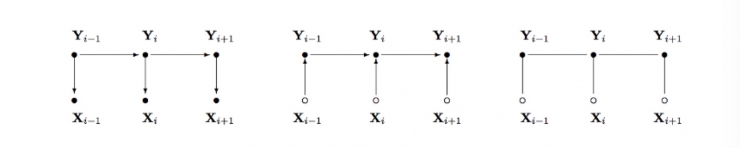

(The second is MEMM, the third is CRF, X is the sentence, and Y is the positional annotation.)

The MEMM is a locally normalized model: the probability distribution is normalized at each character position. This model has a well-known problem called label bias: in the extreme case, the current tag is determined entirely by the previous tag, and the current character itself is ignored.

CRF solves this problem through global normalization. Both models have been applied to word segmentation (Xue et al. 2003, Peng et al. 2004, Tseng et al. 2005, etc.) and achieved good results.

As the name suggests, character-based models cannot directly model the correlation between adjacent words, nor can they directly see the string of an entire word. In terms of the feature functions in MEMM and CRF, the model at the current position has no access to important features such as "the current word" or "the words on either side" and has to substitute character-based features. This often costs modeling effectiveness and efficiency.

The word-based model solves this problem well.

Much of the work in this area borrows from transition-based parsing to solve word segmentation. Transition-based parsing is an incremental, bottom-up parsing approach. It generally processes the input one unit at a time from left to right, keeps the incomplete analysis obtained so far on a stack, and uses machine learning to decide whether to combine the current partial results or to read the next input and extend them.

For segmentation specifically, each time a character is read, the algorithm decides whether it extends the word currently on the stack or starts a new word. One problem is that the number of partial results kept up to the current position can be very large (in the worst case, every possible segmentation so far), so pruning is necessary to keep the search space manageable.
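
The procedure can be sketched as a beam search over two actions, "start a new word" and "extend the last word", with the beam width acting as the pruning mechanism. In the sketch below, `toy_score` is a hand-written stand-in for the learned scoring model (an assumption for illustration: dictionary words are rewarded by length, everything else is penalized); a real system would score actions or words with a trained model.

```python
def beam_segment(text, score_word, beam_size=4):
    """Read characters left to right; keep only the best `beam_size` partial segmentations."""
    beam = [[]]  # each hypothesis is the list of words built so far
    for ch in text:
        candidates = []
        for words in beam:
            # Action 1: start a new word with the current character.
            candidates.append(words + [ch])
            # Action 2: extend the last (still open) word with the current character.
            if words:
                candidates.append(words[:-1] + [words[-1] + ch])
        # Pruning: without this step the number of hypotheses doubles at every character.
        candidates.sort(key=lambda ws: sum(score_word(w) for w in ws), reverse=True)
        beam = candidates[:beam_size]
    return beam[0]


# Toy stand-in for a learned model, for illustration only.
vocab = {"日文", "文章", "章鱼", "怎么", "说"}


def toy_score(word):
    return float(len(word)) if word in vocab else -float(len(word))


print(beam_segment("日文章鱼怎么说", toy_score, beam_size=4))
```

Because every hypothesis is a list of whole words, the scoring function can look at complete word strings, which is exactly what the character-tagging models cannot do directly.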

There is another word-based method, mentioned here only briefly (Andrew 2006), that is similar to the transition-based approach just described. The model is the semi-CRF (Sarawagi & Cohen 2004). It has many applications; many NER systems inside Microsoft use it. It is essentially a higher-order CRF that models segment-level dependencies by extending the state space, and it can likewise exploit the relationship between adjacent words for segmentation. Just as the previous method needs pruning, a semi-CRF in practice needs a limit on segment length to control the cost of searching for the optimal solution.
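
The role of the segment length limit can be seen in a small segment-level dynamic program. This is only a sketch of the decoding step under simplifying assumptions: each segment is scored independently by a hand-written `toy_score` function, whereas a real semi-CRF uses learned feature weights and can also condition on the neighboring segment.

```python
def semi_markov_decode(text, score_segment, max_len=4):
    """Find the highest-scoring segmentation where no segment exceeds max_len characters."""
    n = len(text)
    best = [0.0] + [float("-inf")] * n   # best[i]: best score for text[:i]
    back = [0] * (n + 1)                 # back[i]: start index of the last segment in text[:i]
    for i in range(1, n + 1):
        # Only segments of length 1..max_len are tried, so the cost is O(n * max_len).
        for length in range(1, min(max_len, i) + 1):
            score = best[i - length] + score_segment(text[i - length:i])
            if score > best[i]:
                best[i], back[i] = score, i - length
    words, i = [], n
    while i > 0:
        words.append(text[back[i]:i])
        i = back[i]
    return words[::-1]


# Toy stand-in for learned segment scores, for illustration only.
vocab = {"日文", "文章", "章鱼", "怎么", "说"}


def toy_score(segment):
    return float(len(segment)) if segment in vocab else -float(len(segment))


print(semi_markov_decode("日文章鱼怎么说", toy_score, max_len=4))
```

Without the `max_len` bound the inner loop would range over every possible segment start, making decoding quadratic in the sentence length.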

▎ What are the applications of deep learning in word segmentation?

Of course. Deep learning has developed very rapidly in recent years and has had great influence, so it deserves a separate mention.

As with most tasks in natural language processing, it is no surprise that deep learning has been applied to word segmentation, and there have been many related papers in recent years. Compared with earlier methods, the main change deep learning brings is in how features are defined and extracted. Both character-based and word-based approaches have seen work of this kind in recent years.

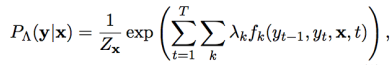

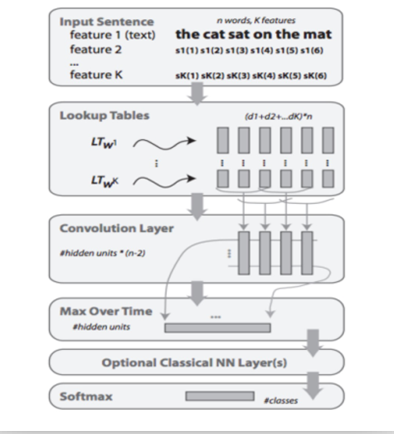

Character-based deep learning for sequence labeling in NLP was actually done as early as 2008 (Collobert & Weston 2008). The main idea is to let a neural network extract features automatically at each position and predict the label for that position. A tag transition model can also be combined with the emission scores output by the network, with the best label sequence found by Viterbi decoding. Progress in recent years has come mainly from using more powerful neural networks to extract more useful information, which improves segmentation accuracy (Zheng et al. 2013, Pei et al. 2014, Chen et al. 2015, Yao et al. 2016, etc.).
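
The decoding step can be sketched as follows. The per-character emission scores are hand-written numbers standing in for the output of a neural network, and the transition model is simplified to hard constraints (legal BIES transitions score 0, illegal ones are forbidden); both are assumptions for illustration, since a trained system would learn the transition scores.

```python
TAGS = "BIES"
NEG_INF = float("-inf")
# Legal tag bigrams in the BIES scheme.
ALLOWED = {("B", "I"), ("B", "E"), ("I", "I"), ("I", "E"),
           ("E", "B"), ("E", "S"), ("S", "B"), ("S", "S")}


def transition(prev, cur):
    """Hard-constraint transition score (assumed; normally learned)."""
    return 0.0 if (prev, cur) in ALLOWED else NEG_INF


def viterbi(emissions):
    """emissions: one dict {tag: score} per character. Returns the best legal tag sequence."""
    # The first character must begin a word (B) or be a word by itself (S).
    score = {t: (emissions[0][t] if t in "BS" else NEG_INF) for t in TAGS}
    backpointers = []
    for em in emissions[1:]:
        new_score, pointer = {}, {}
        for cur in TAGS:
            prev = max(TAGS, key=lambda p: score[p] + transition(p, cur))
            new_score[cur] = score[prev] + transition(prev, cur) + em[cur]
            pointer[cur] = prev
        backpointers.append(pointer)
        score = new_score
    # The last character must end a word (E) or be a word by itself (S).
    last = max("ES", key=lambda t: score[t])
    path = [last]
    for pointer in reversed(backpointers):
        path.append(pointer[path[-1]])
    return path[::-1]


# Hand-written emission scores for a three-character input, for illustration only.
emissions = [
    {"B": 1.0, "I": 0.0, "E": 0.0, "S": 0.5},
    {"B": 0.9, "I": 0.1, "E": 0.8, "S": 0.2},
    {"B": 0.0, "I": 0.0, "E": 0.3, "S": 1.0},
]
print(viterbi(emissions))
```

Picking the best tag independently at each position would give B, B, S here, which is not a valid segmentation; decoding over the whole sequence returns the best tag sequence that is.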

This is the basic architecture of Collobert & Weston (2008); recent work does not deviate much from it in structure. A globally normalized CRF model can also take features extracted automatically by a neural network (DNN, CNN, RNN, LSTM, etc.). This is already widely used in NER and applies equally well to word segmentation, so I will not go into details.

The word-based, transition-based segmentation mentioned earlier has also recently been extended with deep learning. The action model (continue the current word or start a new word), originally a linear model, can also be implemented with a neural network, which simplifies feature definition and improves accuracy.

In addition to deep learning, what are the new directions for the development of word segmentation?

Deep learning is, of course, the newest and most important direction of recent years. Beyond that, joint modeling is also worth mentioning.

Traditional Chinese natural language processing usually treats word segmentation as a preprocessing step, so the system is a pipeline. One problem this brings is error propagation: segmentation errors affect the later, deeper analysis of the language, such as POS tagging, chunking, and parsing. Academia has therefore done a lot of work on joint modeling, whose main goal is to perform word segmentation together with other, more complex analysis tasks (Zhang & Clark 2010, Hatori et al. 2012, Qian & Liu 2012, Zhang et al. 2014, Lyu et al. 2016, etc.).

In recent years, thanks to the rapid development of neural networks, their powerful feature learning ability has greatly simplified the feature selection work needed when modeling multiple tasks jointly. A major benefit of joint modeling is that word segmentation and the other tasks can share useful information: segmentation takes the needs of the other tasks into account, the other tasks consider multiple possible segmentations, and a globally optimal solution can be reached.

The problem, however, is that search complexity often increases significantly, so more effective pruning mechanisms are needed to control the complexity without noticeably hurting the search results.

Part 2 ▎ How is Chinese word segmentation applied in semantic analysis?

OK! Thank you, Dr. Xu, for the detailed description of Chinese word segmentation algorithms. As Dr. Xu said, Chinese word segmentation is the foundation of most downstream applications, which range from POS tagging and named entity recognition (NER) to text classification, language modeling, and machine translation. So I will give a few basic examples of how semantic analysis is done after segmentation. One point to stress up front is that some of the algorithms discussed here (including many mainstream algorithms in academia) are language-independent and all take words as the smallest unit.

So for Chinese, as long as segmentation is done well (and current segmentation accuracy is quite good, reaching a 96% F-score; Zhang et al. 2016), we can plug into the mainstream NLP algorithms developed for English.

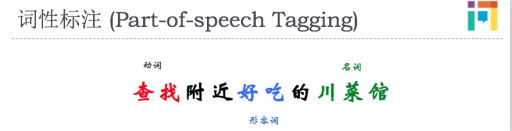

Let's talk about part-of-speech tagging first.

Part-of-speech tagging, in short, means deciding on top of the segmentation result whether each word is a noun, a verb, an adjective, and so on. It is generally treated as a sequence labeling problem, because the decision can be based on the word itself (for example, the word "open" can be guessed to be a verb in most cases without looking at the context) and also on the part of speech of the preceding word (for example, in "open XX", even without knowing what XX is, it is probably a noun because it follows the verb "open").

Another example is named entity recognition.

After obtaining the segmentation result and the part of speech of each word, we can perform named entity recognition on top of them. In academia, named entities generally refer to categories such as PERSON, LOCATION, and ORGANIZATION. In actual commercial products, the categories are subdivided much further according to the needs of each business scenario. For example, place names may be split into provinces, cities, counties, restaurants, hotels, movie theaters, and so on.

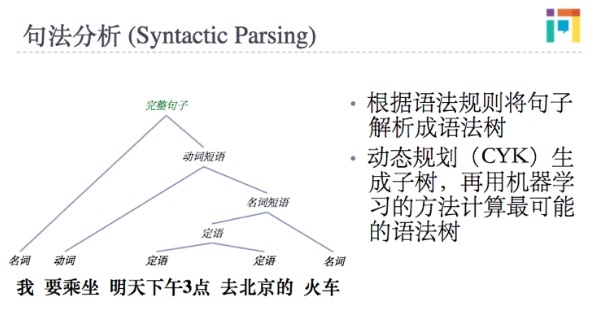

After we get segmentation results and part of speech, we can also build a syntax tree.

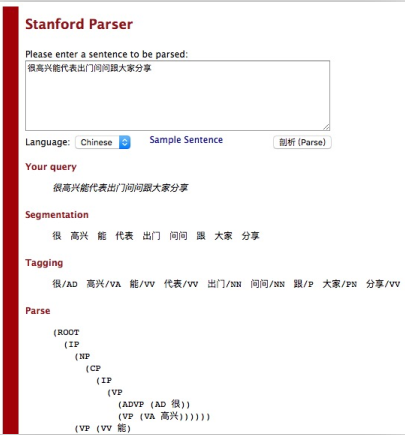

The output contains the grammatical structure of the entire sentence, such as noun phrases and prepositional phrases, as shown in the figure. Finally, we recommend that you go to the Stanford NLP website and try it for yourself at http://nlp.stanford.edu:8080/parser/index.jsp.

Starting from a sentence, this demo shows how segmentation, POS tagging, syntactic parsing, dependency analysis, coreference resolution, and other basic NLP applications are carried out. Try it yourself to get a more intuitive understanding of basic NLP methods such as word segmentation.

What are the difficulties encountered in practical applications?

Many NLP algorithms from academia run into all kinds of difficulties when they are put into real application scenarios and face ever-changing human language. I would like to share some of the experience and difficulties we have had with Chinese word segmentation over the past few years. First, a small advertisement, since some friends in the group may not know us well. Mobvoi (Chumenwenwen) was founded in 2012 and is an artificial intelligence startup with voice search at its core. We have worked on voice search for four years, from the mobile app to watches and cars; the carrier may differ, but the core is always our voice search system combining speech and semantics.

For example, as shown in the figure above, the user says: "Nanjing Food Stall near Jiaotong University". The speech is converted to text by speech recognition and then handled by our semantic analysis system, which runs word segmentation, part-of-speech tagging, and named entity recognition. We can then determine the topic of the query (restaurant booking, navigation, weather, etc.), extract the relevant keywords, and hand them to the search team for retrieval and display.

Because Mobvoi (Chumenwenwen) focuses on everyday life information queries, it is very important to correctly identify the entity names in a query (POIs, movie titles, person names, song titles, etc.). Our NLP system also uses word segmentation as its foundation: an entity name can only be identified correctly if the whole name has been segmented out correctly and completely.

Our segmentation therefore needs to be biased toward entity names. As Dr. Xu mentioned earlier, there is no "one-size-fits-all" standard for word segmentation; in our NLP system, one of the most important criteria is that segmentation cuts out these entity names correctly.

Our segmentation relies heavily on entity word lists, and in practice this has run into great difficulties.

The first is the sheer size of the entity word lists. POIs (points of interest) alone number in the tens of millions, and there are always new restaurants and hotels that are not covered.

The second is heavy noise. "I love you" and "The weather is good" are restaurant names we found in real word lists, not to mention all the strange song titles.

These real-world problems create great difficulties for segmentation in a statistical system, so we have made several efforts to address them.

1) Establish a robust new-word discovery mechanism, regularly replenish our POI vocabulary, and build entity word lists that are as comprehensive as possible. However, larger entity word lists also bring more noise, which causes problems for segmentation and subsequent semantic recognition.

2) Use machine learning to remove noise words from the entity word lists. Noise words are entries in the restaurant list that people would not normally think of as restaurants (such as "I love you" or "The weather is good"), or entries in the song list that are not generally regarded as songs (such as "nearby"), and so on. Our algorithm automatically filters out such low-confidence entity words to avoid noise.

3) Connect to the Knowledge Graph and use richer information to help segment correctly. To give a real example, take "No. 56 Gaojie Street": a general-purpose segmenter would split it into "Gaojie / 56 /", but friends from Jinan in the group may recognize at a glance that it is the name of a well-known chain restaurant. Combined with our Knowledge Graph (KG), if the user's current location is Jinan, then even if our noise-removal algorithm considers "No. 56 Gaojie Street" unlikely to be a restaurant, we take the information from the KG into account and correctly identify it as a restaurant.

At the same time, we are actively trying new methods, such as accepting several ambiguous segmentations and using an evaluation of the final search results to rank the best answer. Overall, the quality of Chinese word segmentation is acceptable: our sampled error analysis shows that the proportion of semantic analysis errors caused by segmentation is relatively low. In the long run, we will continue to trust the results of the current segmentation system.

Summary:

Chinese word segmentation is an unavoidable step in Chinese NLP. With the rise of deep learning, many people hope this new family of machine learning algorithms can bring something new to it. In this article, two senior researchers covered what Chinese word segmentation is, the traditional segmentation methods, segmentation combined with deep learning, the use of segmentation in semantic analysis, and the problems encountered when applying it in real products. Moving from the basics to the advanced and from theory to application, they showed how Chinese word segmentation meets commercial products.

Appendix: Dr. Xu's references.

Peng et al. 2002, Investigating the Relationship between Word Segmentation Performance and Retrieval Performance in Chinese IR

Gao et al. 2005, Chinese Word Segmentation and Named Entity Recognition: A Pragmatic Approach

Chang et al. 2008, Optimizing Chinese Word Segmentation for Machine Translation Performance

Zhang & Clark 2007, Chinese Segmentation with a Word-Based Perceptron Algorithm

Sarawagi & Cohen 2004, Semi-Markov Conditional Random Fields for Information Extraction

Andrew 2006, A Hybrid Markov/Semi-Markov Conditional Random Field for Sequence Segmentation

Collobert & Weston 2008, A Unified Architecture for Natural Language Processing: Deep Neural Networks with Multitask Learning

Zheng et al. 2013, Deep Learning for Chinese Word Segmentation and POS Tagging

Pei et al. 2014, Max-Margin Tensor Neural Network for Chinese Word Segmentation

Chen et al. 2015, Gated Recursive Neural Network for Chinese Word Segmentation

Chen et al. 2015, Long Short-Term Memory Neural Networks for Chinese Word Segmentation

Yao et al. 2016, Bi-directional LSTM Recurrent Neural Network for Chinese Word Segmentation

Zhang et al. 2016, Transition-Based Neural Word Segmentation

Zhang & Clark 2010, A Fast Decoder for Joint Word Segmentation and POS-Tagging Using a Single Discriminative Model

Hatori et al. 2012, Incremental Joint Approach to Word Segmentation, POS Tagging, and Dependency Parsing in Chinese

Qian & Liu 2012, Joint Chinese Word Segmentation, POS Tagging and Parsing

Zhang et al. 2014, Character-Level Chinese Dependency Parsing

Lyu et al. 2016, Joint Word Segmentation, POS-Tagging and Syntactic Chunking
