In 2010, traffic accidents killed 1.2 million people worldwide. In the United States alone, there are 6 million traffic accidents each year, causing economic losses of $160 billion. Car accidents remain the leading cause of death for people aged 4 to 34. Moreover, 93% of these accidents are caused by human error, most often driver negligence.

TI's Advanced Driver Assistance Systems (ADAS) application processor team is using innovative semiconductor devices to develop technologies that reduce accidents and move toward an autonomous driving experience. Because automated systems can be more reliable and react faster than human drivers, they can reduce the number of collisions.

ADAS relies on camera and radar sensors mounted on the front, sides, and rear of the vehicle. These sensors act as additional “eyes” and “ears” that let the vehicle sense its surroundings. Sophisticated algorithms process the raw sensor data, and the useful information they extract is presented to the driver as various alerts. This allows the driver to react; in more advanced systems, the vehicle itself can also take control of steering and/or braking to actively avoid an accident.

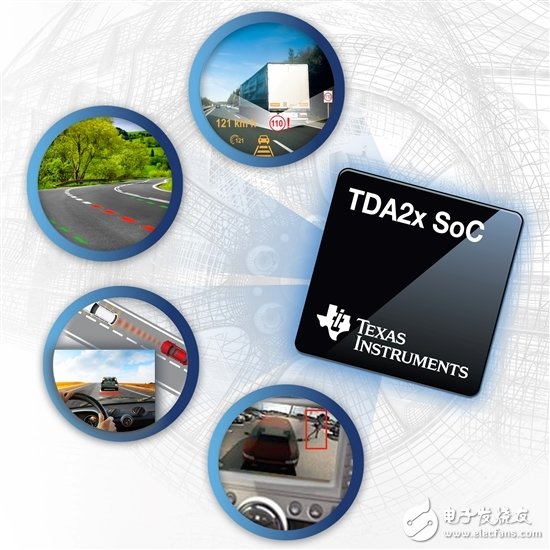

At a high level, these applications fall into four categories: front camera, parking assist, radar, and sensor fusion. Lane Departure Warning (LDW) is one of many applications enabled by ADAS front-camera technology; it alerts the driver when the vehicle unintentionally drifts out of its lane. Lane Keeping Assist is a more advanced version of LDW that automatically applies torque to the steering wheel to keep the vehicle centered in its lane without any driver intervention. Pedestrian detection, front and rear collision avoidance, adaptive cruise control, and blind-spot assistance are other ADAS applications already in use on vehicles. Systems that run ADAS algorithms place high demands on performance, integration, and power consumption.
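
To make the LDW behavior concrete, here is a minimal sketch of one common warning criterion, time-to-line-crossing (TTLC). It assumes the front-camera pipeline has already estimated the vehicle's lateral offset from the lane center, its lateral drift speed, and the lane width. The class and function names, the sign convention, and the 1-second default threshold are hypothetical illustrations, not TI's algorithm.

```python
from dataclasses import dataclass


@dataclass
class LaneState:
    # Hypothetical inputs, assumed to come from an upstream lane-detection stage.
    lateral_offset_m: float   # vehicle center relative to lane center (+ = left)
    lateral_speed_mps: float  # rate of change of lateral_offset_m (+ = drifting left)
    lane_width_m: float
    vehicle_width_m: float
    turn_signal_on: bool


def time_to_line_crossing(s: LaneState) -> float:
    """Seconds until the vehicle's edge reaches the marking it is drifting toward."""
    # Free space on each side when the vehicle is perfectly centered.
    half_free = (s.lane_width_m - s.vehicle_width_m) / 2.0
    if s.lateral_speed_mps > 0:        # drifting left
        distance = half_free - s.lateral_offset_m
    elif s.lateral_speed_mps < 0:      # drifting right
        distance = half_free + s.lateral_offset_m
    else:
        return float("inf")            # no lateral motion, no crossing predicted
    if distance <= 0:
        return 0.0                     # edge is already on or past the marking
    return distance / abs(s.lateral_speed_mps)


def ldw_should_warn(s: LaneState, ttlc_threshold_s: float = 1.0) -> bool:
    """Warn when an unsignaled drift will cross a lane marking within the threshold."""
    if s.turn_signal_on:
        return False  # treat a signaled maneuver as an intentional lane change
    return time_to_line_crossing(s) < ttlc_threshold_s


if __name__ == "__main__":
    drifting = LaneState(0.5, 0.5, 3.5, 1.8, turn_signal_on=False)
    print(time_to_line_crossing(drifting), ldw_should_warn(drifting))
```

Suppressing the warning when the turn signal is on is a simple way to separate deliberate lane changes from unintentional drift; a Lane Keeping Assist function would go one step further and convert the same lane estimate into a corrective steering torque.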

TI's new TDA2x SoC family, built on a scalable heterogeneous architecture, is TI's answer to these requirements. Its Vision AccelerationPac uses Embedded Vision Engines (EVEs) that work alongside industry-leading DSP and ARM® cores. Each EVE in the Vision AccelerationPac delivers up to 8x more compute performance for advanced vision analytics within the same power budget and in a cost-effective package, making it practical to deploy an extensive, state-of-the-art library of ADAS applications.

Today, the full range of ADAS applications (front camera, surround view, and sensor fusion) can be implemented on one common, scalable architecture. This shortens time to market and lowers development investment, because multiple applications can share common algorithms. For the front camera, more than five ADAS applications can run simultaneously on less than 3 W. Multiple flexible video input and output ports, video decoding, Ethernet AVB, and a powerful graphics engine enable multi-camera 3D parking assist systems, connected over low-voltage differential signaling (LVDS) or Ethernet, that render a virtual image of the vehicle's surroundings. For sensor fusion, the TDA2x SoC acts as a central processor that preprocesses data from multiple ADAS sensors to support more robust decision making. One example is fusing data from the front camera and the front long-range (LR) radar.

With the advanced technology of the TDA2x SoC, a new, reimagined driving experience is within reach!
