2016 was the breakout year for China's drone market. UAVs entered the public eye and spread through the mass market faster than many people expected. From early onboard aerial recording to later real-time viewing, convenient image transmission has undoubtedly been a major selling point for drones and has drawn plenty of attention. This post analyzes drone image transmission technology.

Concept

The term "image transmission" is clearly not a formal technical definition; it probably originated among veteran RC model hobbyists. Professional aerospace vehicles do not carry separate video transmission equipment. The concept of image transmission exists only in the field of consumer drones.

Limitations

1. Cost:

There is no need to doubt how far or how fast wireless communication can go. The technology has matured to the point where nobody questions 1080P images sent back from Mars.

Transmitting drone video over more than 100 kilometers is not impossible, but at a price of more than one million yuan it is expensive.

At present, 1080P image transmission products on the market are mostly priced below 1,700 US dollars, so cost is the first constraint on consumer drone design.

2. Law:

The highest legal document governing radio management in China is the Radio Regulations of the People's Republic of China. It was issued by the State Council and the Central Military Commission and is enforced by radio regulatory agencies at all levels. To license an image transmission link separately, the equipment must first obtain a "Radio Transmission Equipment Type Approval Certificate", which is granted against the technical specifications for radio transmitters laid down in the national radio frequency allocation regulations. Obtaining a professional radio license is feasible, but it cannot be rolled out at scale for consumer drones.

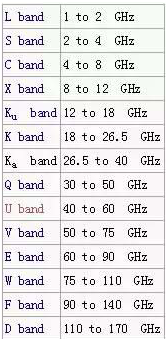

For professional aerospace vehicles, dedicated telemetry and control bands have been reserved in the spectrum allocation, whereas consumer UAVs have to make do with the ISM (Industrial, Scientific and Medical) bands defined by ITU-R (the ITU Radiocommunication Sector).

The 13.56 MHz, 27.12 MHz, 40.68 MHz, 433 MHz, 915 MHz, 2.4 GHz and 5.8 GHz bands can all be used without a license, provided the transmit power stays within 1 W;

Bands at 433 MHz and below can rarely offer the bandwidth that HD image transmission needs;

Half of the 915 MHz band is already occupied by GSM;

The L-band has little bandwidth to spare;

The 2.4 GHz S-band has become the first choice for long-range 1080P, but designers of 4K or higher-definition links struggle to find enough bandwidth there at low cost;

The 5.8 GHz C-band offers wider channels, but with the same transmit power and receiver sensitivity its range is only 41.4% of that at 2.4 GHz. Its attenuation is also more sensitive to water vapor, so the practical range is under 30%. Each band has its pros and cons.
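The 41.4% figure is consistent with the free-space path loss model, in which loss depends on the product of distance and frequency, so for a fixed link budget the range scales as 1/f. The sketch below is only an illustration under that assumption (ideal free space, identical antennas); the function names are made up for this example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB between isotropic antennas."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def range_ratio(freq_a_hz: float, freq_b_hz: float) -> float:
    """Range at freq_b relative to freq_a for the same allowed path loss."""
    return freq_a_hz / freq_b_hz


ratio = range_ratio(2.4e9, 5.8e9)
print(f"5.8 GHz range relative to 2.4 GHz: {ratio:.1%}")
```

The extra loss from water vapor and obstacles is not modeled here, which is why the practical figure quoted above is lower.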

Figure 1 Wireless spectrum

3. Coding technology

1. Software/hardware structure: OpenMAX IL + Venus

2. Coding standard: H.264 (APQ8074) / H.265 (APQ8053)

3. Rate control: CBR (Constant Bit Rate). In network transmission, so-called CBR is generally ABR (average bit rate): the average bit rate per unit time is constant, and the encoder's output buffer smooths out the fluctuations.

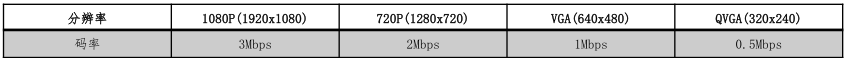

Figure 2 Bit rate

4. Bit rate/frame rate adaptation: Dynamic video rate adaptation (rave) is an algorithm library provided by Qualcomm. Based on the changing Wi-Fi bandwidth and channel quality, it calculates a suitable video bit rate and frame rate, which helps minimize delay and image corruption.

5. I-frame interval adjustment: at a frame rate of 30 fps, one I-frame is sent every 30 or 60 frames. This achieves higher image quality at a lower bit rate.

6. I-frame retransmission: if an I-frame is lost or damaged, the picture freezes for a long time. When the receiver reports this, the transmitter immediately resends an I-frame, which shortens the freeze.

7. I-frames carry SPS/PPS information: without SPS/PPS the receiver cannot decode correctly, so the stream must include this information to prevent a black screen after the link drops and reconnects.
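One way to check the SPS/PPS point on a captured stream is to scan the NAL unit types. In H.264, `nal_unit_type` 7 is an SPS, 8 is a PPS and 5 is an IDR slice. The sketch below assumes an H.264 Annex B byte stream (start-code delimited); it does not apply to H.265, whose NAL header is laid out differently. The function names are illustrative, not part of any library.

```python
from typing import Iterator

NAL_IDR, NAL_SPS, NAL_PPS = 5, 7, 8  # H.264 nal_unit_type values


def iter_nal_types(stream: bytes) -> Iterator[int]:
    """Yield the nal_unit_type of each NAL unit in an Annex B byte stream."""
    i = 0
    while True:
        # A 4-byte start code (00 00 00 01) also contains the 3-byte one.
        i = stream.find(b"\x00\x00\x01", i)
        if i < 0 or i + 3 >= len(stream):
            return
        yield stream[i + 3] & 0x1F  # low 5 bits of the NAL header byte
        i += 3


def idr_has_parameter_sets(stream: bytes) -> bool:
    """True if every IDR picture is preceded by both an SPS and a PPS."""
    seen = set()
    prev = None
    for nal_type in iter_nal_types(stream):
        if nal_type in (NAL_SPS, NAL_PPS):
            seen.add(nal_type)
        elif nal_type == NAL_IDR and prev != NAL_IDR:
            # First slice of a new IDR picture: both sets must have arrived.
            if seen != {NAL_SPS, NAL_PPS}:
                return False
            seen.clear()
        prev = nal_type
    return True
```

A receiver that joins mid-stream or reconnects can only start decoding at an IDR picture for which this check holds.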

Common protocols

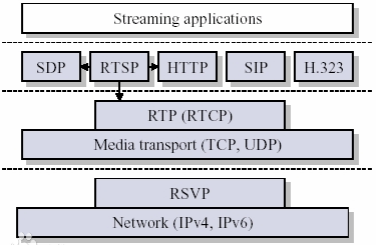

1. RTP

1.1. The protocol is simple and easy to packetize.

1.2. jrtp open-source library: X license, with almost no restrictions.

1.3. Optimized for H.264/H.265 coding characteristics: different packetization strategies.

1.4. Extended with a configurable packet transmission interval: smooths bit-rate fluctuations and keeps the instantaneous bit rate from spiking.

1.5. Uses the RTP extension header: carries the frame number, which the algorithm uses for data synchronization.

1.6. Uses memory pools: reduces memory copies between modules and lowers latency.

Figure 3 RTP
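To make item 1.5 concrete, here is a minimal sketch of an RTP packet whose header extension carries a frame number. The fixed header and extension layout follow RFC 3550. The profile ID `0x1000`, the single 32-bit frame-number field and the function names are assumptions made for this example, not the project's actual format.

```python
import struct

EXT_PROFILE_ID = 0x1000  # hypothetical "defined by profile" value


def build_rtp_packet(payload: bytes, seq: int, timestamp: int, ssrc: int,
                     frame_number: int, payload_type: int = 96,
                     marker: bool = False) -> bytes:
    """Build an RTP packet with a one-word header extension."""
    version, padding, extension, csrc_count = 2, 0, 1, 0
    byte0 = (version << 6) | (padding << 5) | (extension << 4) | csrc_count
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    header = struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                         timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    # Extension: 16-bit profile ID, 16-bit length in 32-bit words, then data.
    ext = struct.pack("!HHI", EXT_PROFILE_ID, 1, frame_number & 0xFFFFFFFF)
    return header + ext + payload


def parse_frame_number(packet: bytes) -> int:
    """Read the frame number back out of the header extension."""
    byte0 = packet[0]
    if not (byte0 >> 4) & 1:
        raise ValueError("packet has no header extension")
    offset = 12 + 4 * (byte0 & 0x0F)  # skip fixed header and CSRC list
    _profile, length_words = struct.unpack_from("!HH", packet, offset)
    if length_words < 1:
        raise ValueError("extension too short for a frame number")
    (frame_number,) = struct.unpack_from("!I", packet, offset + 4)
    return frame_number
```

Because the frame number travels in the header, the receiver can detect a missing frame without touching the video payload.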

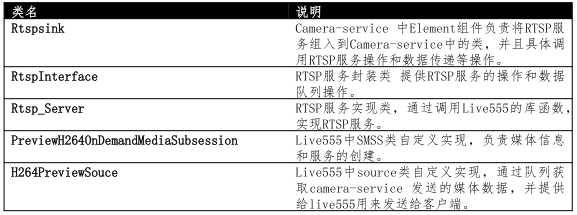

2. RTSP

2.1. Multicast support: Live555 open-source library.

2.2. LGPLv2.1 license, so it can be used in commercial software.

2.3. Related classes

Figure 4 RTSP related classes

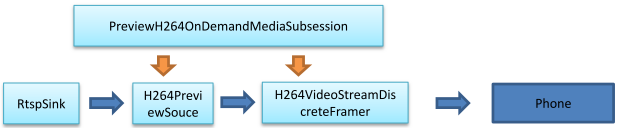

2.4. Data flow: after the RTSP server receives an RTSP request, PreviewH264OnDemandMediaSubsession creates an H264PreviewSouce object and an H264VideoStreamDiscreteFramer object. H264PreviewSouce fetches H.264 data from Rtspsink through a queue and then sends it to the mobile phone.

Figure 5 RTSP data stream

3. Problems encountered in image transmission development

In real-time playback, the hardest problems to solve are stuttering and picture corruption (blocky or garbled frames). Behavior also differs from phone to phone: even on a phone with good performance, the picture can sometimes jitter badly.

To fix stuttering, you first need to know what causes it:

1. Data is lost in transit, so there is nothing to display.

2. The app does not receive data fast enough, so data is lost.

3. Frames are dropped on purpose to avoid a corrupted picture. For example, if an I-frame has just been lost, the following P-frames are discarded and display resumes only at the next I-frame.

Picture corruption is caused by frame loss. If an I-frame (key frame) is lost, the subsequent P-frames sent to ffmpeg decode into a corrupted or mosaic picture. (There is also the notion of "big P" and "small P" frames, which is not covered here.) Note that this link does not use B-frames; only two frame types are transmitted, I-frames and P-frames. Tolerating a little more corruption reduces stuttering, but customers generally find stuttering more acceptable than a corrupted picture.

Solutions:

The first problem: data lost in transit. This depends on the external environment, the stability of the radio link and so on, and the app has no good remedy. It comes down to two choices: TCP or UDP. In actual measurements, TCP gave the better result.

TCP: guarantees data integrity in transit, so there is less picture corruption, but the range is shorter than with UDP.

UDP: does not guarantee data integrity, so the picture corrupts easily, but the range is relatively long.

The second problem: the app does not receive data fast enough. In my case, receiving and decoding originally shared one thread and display ran in another. When decoding fell behind, it blocked the receiving thread and data was lost (UDP). The fix is to give receiving its own thread, run decoding and display in another thread, and pass data between them through a buffer queue, that is, add buffering. Practically all online players work this way.
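The receive/decode split can be sketched with two threads and a bounded queue. This is only an illustration of the pattern in Python, not the project's JNI code; `receive` and `decode_and_display` are hypothetical callables supplied by the caller, and `receive` is assumed to return `None` when the stream ends.

```python
import queue
import threading


def run_pipeline(receive, decode_and_display, max_buffered: int = 256) -> None:
    """Receive in one thread, decode and display in another."""
    buf = queue.Queue(maxsize=max_buffered)
    stop = object()  # sentinel marking end of stream

    def receiver() -> None:
        while True:
            packet = receive()
            if packet is None:
                buf.put(stop)
                return
            try:
                buf.put_nowait(packet)
            except queue.Full:
                # Buffer overflow: drop the packet instead of blocking
                # the socket read, which would lose data in the kernel.
                pass

    def consumer() -> None:
        while True:
            item = buf.get()
            if item is stop:
                return
            decode_and_display(item)

    threads = [threading.Thread(target=receiver),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

The queue size trades latency for tolerance of slow decoding: a larger buffer absorbs longer decoder stalls but lets the displayed picture fall further behind real time.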

The third problem: this depends on the customer's requirements. To avoid showing a corrupted picture, I simply drop the frames.
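The drop policy can be sketched as a small filter. It assumes each frame arrives tagged with a sequential frame number and its type (`"I"` or `"P"`); that tuple layout and the function name are assumptions for this example.

```python
from typing import Iterable, Iterator, Tuple

Frame = Tuple[int, str, bytes]  # (frame_number, "I" or "P", data)


def drop_until_keyframe(frames: Iterable[Frame]) -> Iterator[Frame]:
    """Pass frames through, but after a gap drop P-frames until the next I-frame."""
    expected = None
    waiting_for_i = True  # nothing is decodable before the first I-frame
    for number, kind, data in frames:
        gap = expected is not None and number != expected
        expected = number + 1
        if kind == "I":
            waiting_for_i = False  # a key frame restores a clean reference
        elif gap:
            waiting_for_i = True   # a reference frame is missing
        if not waiting_for_i:
            yield (number, kind, data)
```

With this policy the viewer sees a short freeze instead of a corrupted picture, which matches the customer preference described above.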

The project uses mpv+EventBus, which makes it very flexible: modules are easy to replace, reuse and rewrite. The Java layer is generally left untouched unless really necessary. Optimization happens in the JNI layer, mainly on the image path, which also makes version iteration easier; otherwise upgrading the client would be painful.

Sharing is the source of human progress. Please refer to:

Http://blog.csdn.net/ad3600/article/details/54706102

Http://blog.csdn.net/tpyangqingyuan/article/details/54574977
